Blog Archives

This AI Ain’t It

Wilhelm wrote a post called “The Folly of Believing in AI” and is otherwise predicting an eventual market crash based on the insane capital spent chasing that dragon. The thesis is simple: AI is expensive, so… who is going to pay for it? Well, expensive and garbage, which is the worst possible combination. And I pretty much agree with him entirely – when the music stops, there will be many a child left without a chair but holding a lot of bags, to mix metaphors.

The one problematic angle I want to stress most, though, is the fundamental limitation of AI: it depends on the very data it intends to replace, and yet that data evolves all the time.

Duh, right? Just think about it a bit more though. The best use-case I have heard for AI has been from programmers stating that they can get code snippets from ChatGPT that either work out of the box, or otherwise get them 90% of the way there. Where did ChatGPT “learn” code though? From scraping GitHub and similar repositories for human-made code. Which sounds an awful lot like what a search engine could also do, but never mind. Even in the extremely optimistic scenario in which no programmer loses their job to future Prompt Engineers, eventually GitHub is going to start (or continue?) to accumulate AI-derived code. That code will be scraped and fed back into the dataset, increasing the error rate and thereby lowering the value the AI had in the first place.

Alternatively, let’s suppose there isn’t an issue with recycled datasets and error rates. There will be less need for programmers, which means less opportunity for novel code and/or new languages, since anything new would have to compete with much cheaper, “solved” solutions. We then get locked into existing code at current levels of function unless some hobbyists stumble upon the next best thing.

The other use-cases for AI are bad in more obvious, albeit understandable ways. AI can write tailored cover letters for you, or if you’re feeling extra frisky, apply for hundreds of job postings a day on your behalf. Of course, HR departments around the world fired the first shots of that war when they started using algorithms to pre-screen applications, so this bit of turnabout feels like fair play. But what is the end result? AI talking to AI? No person can or will manually sort through 250 applications per job opening. Maybe the most “fair” solution will just be picking people randomly. Or consolidating all the power into recruitment agencies. Or, you know, just nepotism and networking per usual.

Then you get to the AI-written house listings, product descriptions, user reviews, or office emails. Just look at this recent Forbes article on how to use ChatGPT to save you time in an office scenario:

- Wrangle Your Inbox (Google how to use Outlook Rules/filters)

- Eliminate Redundant Communication (Ooo, Email Templates!)

- Automate Content Creation (spit out a 1st draft on a subject based on prompts)

- Get The Most Out Of Your Meetings (transcribe notes, summarize transcriptions, create agendas)

- Crunch Data And Offer Insights (get data analysis, assuming you don’t understand Excel formulas)

The article states email and meetings represent 15% and 23% of work time, respectively. Sounds accurate enough. And yet rather than address the glaring, systemic issue of unnecessary communication directly, we are to use AI to just… sort of brute force our way through it. Does it not occur to anyone that the emails you are getting AI to summarize are possibly created by AI prompts from the sender? Your supervisor is going to get AI to summarize the AI article you submitted, have AI create an agenda for a meeting they call you in for, AI is going to transcribe the meeting, which will then be emailed to their supervisor and summarized again by AI. You’ll probably still be in trouble, but no worries, just submit 5000 job applications over your lunch break.

In Cyberpunk 2077 lore, a virus infected and destroyed 78.2% of the internet. In the real world, 90% of the internet will be synthetically generated by 2026. How’s that for a bearish case for AI?

Now, I am not a total Luddite. There are a number of applications for which AI is very welcome. Detecting lung cancer from a blood test, rapidly sifting through thousands of CT scans looking for patterns, potentially using AI to create novel molecules and designer drugs while simulating their efficacy, and so on. Those are useful applications of technology to further science.

That’s not what is getting peddled on the street these days though. And maybe that is not even the point. There is a cynical part of me that questions why these programs were dropped on the public like a free hit from the local drug dealer. There is some money changing hands, sure, and it’s certainly been a boon for Nvidia and other companies selling shovels during a gold rush. But OpenAI is set to take a $5 billion loss this year alone, and they aren’t the only game in town. Why spend $700,000/day running ChatGPT as a loss leader when there doesn’t appear to be anything profitable for it to lead to?

[Fake Edit] Totally unrelated news from last week: Microsoft, Apple, and Nvidia are apparently bailing out OpenAI in another round of fundraising to keep them solvent… for another year, or whatever.

I think maybe the Dead Internet endgame is the point. The collateral damage is win-win for these AI companies. Either they succeed with the AGI moonshot – the holy grail of AI that would change the game, just like working fusion power – or fill the open internet with enough AI garbage to permanently prevent any future competition. What could a brand new AI company even train off of these days? Assuming “clean” output isn’t now locked down with licensing contracts, their new model would be facing off with ChatGPT v8.5 or whatever. The only reasonable avenue for future AI companies would be to license the existing datasets themselves into perpetuity. Rent-seeking at its finest.

I could be wrong. Perhaps all these LLMs will suddenly solve all our problems, and not just be tools of harassment and disinformation. Considering the big phone players are making deepfake software on phones standard this year, I suppose we’ll all find out pretty damn quick.

My prediction: mo’ AI, mo’ problems.

AI Ouroboros, Reddit Edition

Last year, if you recall, there was a mod-led protest at Reddit over some ham-fisted changes from the admins. Specifically, the admins implemented significant costs/throttles on API calls such that no 3rd-party Reddit app would have been capable of surviving. Even back then it was known that the admins were snuffing out competition ahead of an eventual Reddit IPO.

Well, that time is nigh. If you want a piece of an 18-year-old social media company that has never posted a profit ($804m revenue, $90m net loss last year), you can (eventually) purchase $RDDT.

But that’s not the interesting thing. What’s interesting is that Google just purchased a license to harvest AI training material from Reddit, to the tune of $60 million/year. And who is Reddit’s 3rd-largest shareholder currently? Sam Altman, of OpenAI (aka ChatGPT) fame. It’s not immediately clear whether OpenAI has or even needs a similar license, but Altman owns twice as many shares as the current CEO of Reddit so it probably doesn’t matter. In any case, that’s two of the largest AI companies feeding off Reddit.

In many ways, leveraging Reddit was inevitable. It’s been an open secret for years that Google search results have been in decline, even before Google started plastering advertisements six layers deep. Who knew that when you allowed people to get certified in Search Engine Optimization, search results would eventually turn to shit? Yeah, basically everyone. One of the few ways around that, though, was to seed your search with +Reddit, which returned Reddit posts on the topic at hand. Were these intrinsically better results? Actually… yes. A site with weaponized SEO wins when it gets your click. But even though there are bots and karma whores and reposts and all manner of other nonsense on Reddit, fundamentally posts must receive upvotes to rise to the top, which is an added hurdle that SEO by itself cannot clear. Real human input from people who otherwise have no monetary incentive to contribute is much more likely to float to the top and be noticed.

Of course, anyone who actually spends any amount of time on Reddit will understand the downsides of using it for AI training purposes. One of the most upvoted comments on the Reddit post about this:

starstarstar42 3237 points 1 day ago*

Good luck with that, because vinyl siding eats winter squid and obsequious ladyhawk construction twice; first on truck conditioners and then with presidential urology.

Edit: I people my found have

That’s all a bit of cheeky fun, which will undoubtedly be filtered away by the training program. Probably.

What may not be filtered away as easily are the many hundreds/thousands of posts made by bot accounts that repost comments from other people in the same thread. I’m not sure how or why it works, but the reposted content sometimes becomes higher rated than the original; perhaps there is some algorithm to detect a trending comment, which then gets copied and boosted with upvotes from other bot accounts? In any case, karma farming in this automated way allows the account to be sold later to others who need such (disposable) accounts to post in more specialized subreddits that impose minimum requirements before you can post anything (e.g. the account has to be 6+ months old and/or have 200+ karma, etc). Posts from these “mature” accounts are less obviously from bots.

While that may not seem like a big deal at first, the endgame is the same as with SEO: gaming the system. The current bots try to hijack human posts to farm karma. The future bots will be posting human-like responses generated by AI to farm karma. Hell, the reinforcement mechanism is already there, e.g. upvotes! Meanwhile, Google and OpenAI will be consuming Reddit content which itself will consist of more and more of their own AI output. The mythological Ouroboros was supposed to represent a cycle of death and rebirth, but the AI version is more akin to a dog eating its own shit.

I suppose at some point in the future it’s possible for the tech-bro handlers, or perhaps the AI itself, to recognize (via reinforcement) that they need to roll back one iteration due to consuming too much self-generated content. Perhaps long-buried AOL chatroom logs and similar backups would become the new low-background steel, worth its weight in gold, er, Bitcoin.

Then again, it may soon be an open question of how much non-AI content even exists on the internet anymore, by volume. This article mentions experts expect 90% of the internet to be “synthetically generated” by 2026. As in, like, 2 years from now. Or maybe it’s already happened, aka Dead Internet.

[Fake Edit] So… I wrote almost exactly this same post a year ago. I guess the update is: it’s happening.

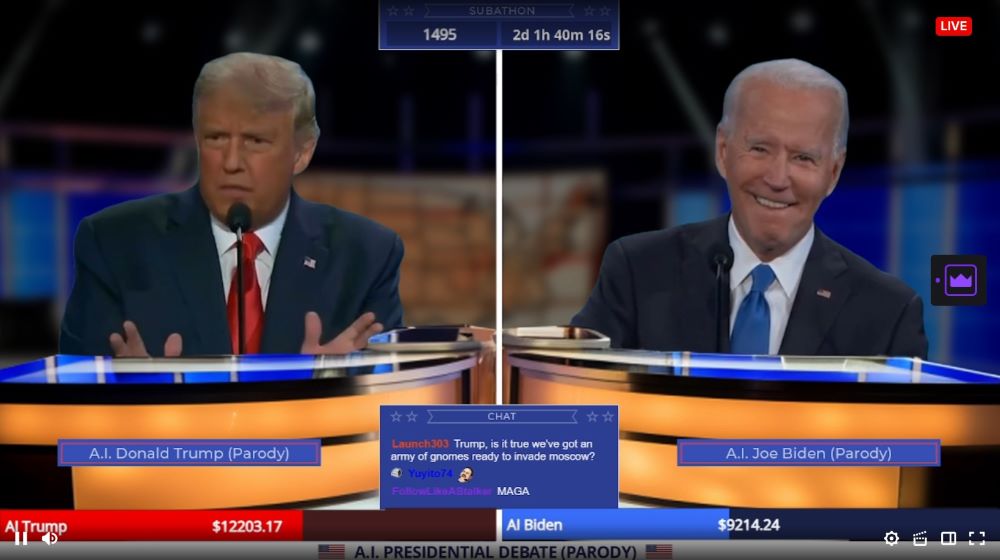

AI Presidential Debate

Oh boy, the future is now:

Welcome to TrumpOrBiden2024, the ultimate AI generated debate arena where AI Donald Trump and AI Joe Biden battle it out 24/7 over topics YOU suggest in the Twitch chat!

https://www.twitch.tv/trumporbiden2024

It’s best to just view a few minutes of it yourself, but this rank absurdity is an AI-driven “deep-fake” endless debate between Trump and Biden, full of NSFW profanity, and somehow splices in topics from Twitch chat. As the Kotaku article mentions:

The things the AI will actually argue about seem to have a dream logic to them. I heard Biden exclaim that Trump didn’t know anything about Pokémon, so viewers shouldn’t trust him. Trump later informed Biden that he couldn’t possibly handle genetically modified catgirls, unlike him. “Believe me, nobody knows more about hentai than me,” Trump declared. Both men are programmed to loosely follow the conversation threads the other sets, and will do all the mannerisms you’ve come to expect out of these debates, like seeing Biden react to a jab with a small chuckle. At one point during my watch, I saw the AI stop going at each other only to start tearing into people in the chat for having bad usernames and for not asking real questions.

It’s interesting how far we have come as a society and culture. At one point, deep-fakes were a major concern. Now, between ChatGPT, Midjourney/Stable Diffusion, and basic Instagram filters, there is a sort of democratization of AI taking place. Granted, most of these tools were given out for free to demonstrate the value of groups who wish to eventually be bought up by multinationals, but what has been developed in such a short time is nevertheless amazing.

Of course, this all may well be the calm before the storm. Elon Musk’s lawyers tried to argue that video of him claiming that Teslas could drive autonomously “right now” (in 2016) was in fact a deep-fake. The judge was not amused and said Elon should then testify under oath that it wasn’t him in the video. The deep-fake claim was quickly walked back. But it is just a matter of time before someone gets arrested or sentenced based on AI-fabricated evidence, and when that comes out, things will get wild.

Or maybe it won’t. Impersonators have been around for thousands of years, people are thrown into jail on fabricated evidence all the time, and perjury is one of those in-your-face crimes whose threat tends to keep people honest (or quiet) on the stand.

I suppose in the meantime, it’s memetime. Until the internet dies.

ChatGPT

Came across a Reddit post entitled “Professor catches student cheating with ChatGPT: ‘I feel abject terror’”. Among the comments was one saying “There is a person who needs to recalibrate their sense of terror.” The response to that was this:

Although I am bearish on the future of the internet in general with AI, the concerns above just sort of made me laugh.

When it comes to doctors and lawyers, what matters are results. Even if we assume ChatGPT somehow made someone pass the bar or get a medical license – and they further had no practical exam components/residency for some reason – the ultimate proof is the real world application. Does the lawyer win their cases? Do the patients have good health outcomes? It would certainly suck to be the first few clients that prove the professionals had no skills, but that can usually be avoided by sticking to those with a positive record to begin with.

And let’s not pretend that fresh graduates who did everything legit are always going to be good at their jobs. It’s like the old joke: what do you call the person who passed medical school with a C-? “Doctor.”

The other funny thing here is the implicit assumption that a given surgeon knowing which drug to administer is better than an AI chatbot. Sure, it’s a natural assumption to make. But surgeons, doctors, and everyone in-between are constantly lobbied (read: bribed) by drug companies to use their new products instead. How many thousands of professionals started over-prescribing OxyContin after attending “all expenses paid” Purdue-funded conferences? Do you know which conferences your doctor has attended recently? Do they even attend conferences? Maybe they already use AI, eh?

Having said that, I’m not super-optimistic about ChatGPT in general. A lot of these machine-learning algorithms get their base data from publicly-available sources. Once a few nonsense-spewing AIs get loose in a Dead Internet scenario, there is going to be a rather sudden Ouroboros situation where ChatGPT consumes anti-ChatGPT nonsense in an infinite loop. Maybe the programmers can whitelist a few select, trustworthy sources, but that limits the scope of what ChatGPT would be able to communicate. And even in the best-case scenario, doesn’t that mean tight, private control over the only unsullied datasets?

Which, if you are catering to just a few, federated groups of people anyway, maybe that is all you need.

N(AI)hilism

May 26

Posted by Azuriel

Wilhelm has a post up about how society has essentially given up the future to AI at this point. One of the anecdotes in there is about how the Chicago Sun-Times ran a top-15 book list that included only 5 real books. Another is about how some students at Columbia University admitted they complete all of their coursework via AI, to make more time for the true reason they enrolled in an Ivy League school: marriage and networking. Which, to be honest, is probably the only real reason to be going to college for most people. But at least “back in the day” one may have accidentally learned something.

As far as concerns go, all of this is almost old news. Back in December I had a post up about how the Project Zomboid folks went out of their way to hire a human artist who turned around and (likely) used AI to produce some or all of the work. You would think that speaks to a profound lack of self-preservation, but apparently not. Maybe they were just ahead of the curve.

Which leads me to the one silver-lining when it comes to the way AI has washed over and eroded the foundations of our society: at least it did so in a manner that destroys its own competitive advantage.

For example, have you seen the latest output from Google’s Veo 3 video generation AI? Among the examples of people goofing around was this pharmaceutical ad for “Puppramin,” a drug that treats depression by encouraging puppies to arrive at your doorstep.

Is it perfect? Of course not. But as the… uh, prompt engineer pointed out on Twitter, these sorts of ads used to cost $500,000 and take a team of people months to produce, but this one took a day and $500 in AI credits. Thing is, you have to ask: what is the eventual outcome? If one company can reduce its ad creation costs by leveraging AI, so can all the others. You can’t even say that the $499,500 saved could be used to purchase more ad space, because everyone in the industry is going to have that extra cash, so bids on timeslots or whatever will increase accordingly.

It all reminds me of the opening salvo in the AI wars: HR departments. When companies started receiving 180 applications for every job posting, HR began using algorithms to filter candidates. All of a sudden, if you knew the “tricks” and keywords to get your resume past said filter, you had a significant advantage. Now? Every applicant can use AI to construct a filter-perfect resume, tailored cover letter, and apply to 500 companies over their lunch break. No more advantage.

At my own workplace, we have been mandated to take a virtual course on AI use ahead of a deployment of Microsoft Claude. The entire time I was watching the videos, I kept thinking “what’s the use case for this?” Some of the examples in the videos were summarization of long documents, creating reports, generating emails, and the normal sort of office stuff. But, again, it all calls into question what problem is being solved. If I use Claude to generate an email and you use Claude to summarize it, what even happened? Other than a colossal waste of resources, of course.

Near as I can tell, there are only two end goals available for this level of AI. The first we can see with Musk’s Grok, where the AI-owners can put their thumbs (more obviously) on the scale to direct people towards skinhead conspiracy theories. I can imagine someone with less ketamine-induced brain damage would be more subtle, nudging people towards products/politicians/etc that have bent the knee and/or paid the fee. The second end goal is presumably to actually make money someday… somehow. Currently, zero of the AI companies out there make any profit. Most of them are free to use right now, though, and that could change in the future. If the next generation of students and workers are essentially dependent on AI to function, suddenly making ChatGPT cost $1000 to use would reintroduce the competitive advantage.

…unless the AI cat is already out of the bag, which it appears to be.

In any case, I am largely over it. Not because I foresee no negative consequences from AI, but because there is really nothing to be done at this point. If you are one of the stubborn holdouts, as I have been, then you will be run over by those who aren’t. Nobody cares about the environmental impacts, the educational impacts, the societal impacts. But what else is new?

We’re all just here treading water until it reaches boiling temperature.

Posted in Commentary

3 Comments

Tags: AI, ChatGPT, Google Veo 3, Nihilism