Blog Archives

Blarghest

The last time I officially joined Blaugust was back in 2015. Back then, the conclusion I came to was that it wasn’t really worth the effort: posting every single day for a month did not meaningfully increase page views. I’m not trying to chase page views per se, but you can’t become a fan of something if you don’t know about it. Discoverability is a real issue, especially if you don’t want to juice SEO metrics in suspect ways. So, on a lark, I decided to rejoin Blaugust nine years later (i.e. this year) to at least throw my hat back in the ring and try to expand my (and others’) horizons.

What I’m finding is not particularly encouraging.

More specifically, I was looking at the list of participants. I’m not going to name names, but more than a few of the dozen I’ve browsed thus far appear to be almost nakedly commercial blogs (e.g. affiliate-linked), AI-based news aggregator sites, and similar nonsense. I’m not trying to be the blogging gatekeeper here, but is there no vetting process to keep out the spam? I suppose that may be a bit much when 100+ people/bots sign up, but it also seems deeply counterproductive to the mission statement of:

Posting regularly builds a community and in this era of AI-slop content, our voices are needed even more than we ever have been at any point in the past.

Ahem. The calls are coming from inside the house, my friends.

[Fake Edit] In fairness, after getting through all 76 of the original list, the number of spam blogs did not increase much. Perhaps a non-standard ordering mechanism would have left a better first impression.

Anyway, we’ll have to see how this Blaugust plays out. I have added 10-20 new blogs to my Feedly roll, and am interested to see where they go from here. Their initial stuff was good enough for my curiosity. The real trick, though, is who is still posting in September.

Forever Winter

Going to be adding Forever Winter onto my list of games that look cool that I’m probably never going to actually play:

I say that because it’s being described as a “co-op tactical squad-based survival horror shooter.” I have zero interest in playing with randoms anymore, let alone in a scenario that allows for an entirely novel way of griefing, e.g. making too much noise and getting caught by AI horrors.

Conceptually though? Game is Badass with a capital B.

While the above trailer looks amazing, it was actually this video that hit hardest:

In short, it seems like most of the people that are working on the game are concept artists that finally get to implement their concept art. A battle tank draped in bound, naked corpses? Flamethrower troops in spacesuits with American flags draped over their face? Yes, please. Granted, I did not quite see any of those in the gameplay reveal trailer, so who knows if they actually follow through.

It will be interesting to see how the game ultimately shakes out. I’m a big fan of grimdark, post-apocalypse looting. Having to be wary of getting into fights that are impossible to win is also compelling. But the real trick will be translating that into long-term fun. Will there be a story mode or overall plot? The trailers seem to indicate you may end up fighting “bosses” eventually, which provides something of a “why” to grind out whatever resources. But if the whole of the game lends itself to not attacking things, and possibly punishes you for doing so, it will be tricky to land the transition without the game feeling like it turned into something else. Sort of like when you have a stealth game that suddenly has a stealth-less boss fight (Deus Ex: Human Revolution), or a traditional FPS with an annoying stealth level (too many to mention).

Regardless, I will be following Forever Winter with interest.

AI Ouroboros, Reddit Edition

Last year, if you recall, there was a mod-led protest at Reddit over some ham-fisted changes from the admins. Specifically, the admins implemented significant costs/throttles on API calls such that no 3rd-party Reddit app would have been capable of surviving. Even back then it was known that the admins were snuffing out competition ahead of an eventual Reddit IPO.

Well, that time is nigh. If you want a piece of an 18-year-old social media company that has never posted a profit – $804m revenue, $90m net loss last year – you can (eventually) purchase $RDDT.

But that’s not the interesting thing. What’s interesting is that Google just purchased a license to harvest AI training material from Reddit, to the tune of $60 million/year. And who is Reddit’s 3rd-largest shareholder currently? Sam Altman, of OpenAI (aka ChatGPT) fame. It’s not immediately clear whether OpenAI has or even needs a similar license, but Altman owns twice as many shares as the current CEO of Reddit so it probably doesn’t matter. In any case, that’s two of the largest AI companies feeding off Reddit.

In many ways, leveraging Reddit was inevitable. It’s been an open secret for years that Google search results have been in decline, even before Google started plastering advertisements six layers deep. Who knew that if you allowed people to get certified in Search Engine Optimization, search results would eventually turn to shit? Yeah, basically everyone. One of the few ways around that, though, was to append +Reddit to your search, which returned Reddit posts on the topic at hand. Were these intrinsically better results? Actually… yes. A site with weaponized SEO wins when it gets your click. But even though there are bots and karma whores and reposts and all manner of other nonsense on Reddit, fundamentally posts must receive upvotes to rise to the top, an added hurdle that SEO alone cannot clear. Real human input from people who otherwise have no monetary incentive to contribute is much more likely to float to the top and be noticed.
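For the curious, here is roughly how a vote-driven ranking decides what floats to the top. This is a sketch based on the “hot” formula from Reddit’s old open-source codebase (the reference timestamp and the 45,000-second divisor come from there); the live site almost certainly weighs things differently today, so treat it as illustrative.

```python
from datetime import datetime, timedelta, timezone
from math import log10

# Fixed reference time (December 2005) used by the old open-source "hot" sort.
REDDIT_EPOCH = 1134028003

def hot(ups: int, downs: int, posted: datetime) -> float:
    """Rank a post: net votes count logarithmically, newer posts get a time bonus."""
    score = ups - downs
    order = log10(max(abs(score), 1))  # 10 net votes -> 1, 100 -> 2, 1000 -> 3
    sign = 1 if score > 0 else -1 if score < 0 else 0
    seconds = posted.timestamp() - REDDIT_EPOCH
    return round(sign * order + seconds / 45000, 7)  # 45000 s = 12.5 hours

# Usage: a post with 100 net upvotes vs. one with 10 submitted 12.5 hours later.
t = datetime(2024, 3, 1, tzinfo=timezone.utc)
print(hot(100, 0, t), hot(10, 0, t + timedelta(hours=12.5)))
```

Because votes count logarithmically, a post needs ten times the net upvotes to keep pace with one submitted 12.5 hours later. Nothing a page does with keywords moves this number; only votes and time do. Which is also why upvotes from bot accounts are the obvious way to game it.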

Of course, anyone who actually spends any amount of time on Reddit will understand the downsides of using it for AI training purposes. One of the most upvoted comments on the Reddit post about this:

starstarstar42 3237 points 1 day ago*

Good luck with that, because vinyl siding eats winter squid and obsequious ladyhawk construction twice; first on truck conditioners and then with presidential urology.

Edit: I people my found have

That’s all a bit of cheeky fun, which will undoubtedly be filtered away by the training program. Probably.

What may not be filtered away as easily are the many hundreds/thousands of posts made by bot accounts that already repost the same comment from other people in the same thread. I’m not sure how or why it works, but the reposted content sometimes becomes higher rated than the original; perhaps there is some algorithm to detect a trending comment, which then gets copied and boosted with upvotes from other bot accounts? In any case, karma farming in this automated way allows the account to be sold later to others who need such (disposable) accounts to post in more specialized subreddits that otherwise impose minimum requirements before you can post anything (e.g. the account has to be 6+ months old and/or have 200+ karma). Posts from these “mature” accounts look less obviously like they come from bots.
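The gate that makes farmed accounts worth buying is trivially simple, which is sort of the problem. A minimal sketch, assuming a hypothetical subreddit rule using the 6-month / 200-karma thresholds from the example above (real subreddits pick their own numbers, and the names here are made up for illustration):

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical thresholds, taken from the example in the text.
MIN_AGE = timedelta(days=182)  # roughly six months
MIN_KARMA = 200

@dataclass
class Account:
    created: datetime
    karma: int  # note: nothing here records *how* the karma was earned

def can_post(acct: Account, now: datetime, require_both: bool = False) -> bool:
    """Check a subreddit-style posting gate.

    require_both=False models the "or" rule (either check suffices);
    require_both=True models the "and" rule.
    """
    old_enough = now - acct.created >= MIN_AGE
    enough_karma = acct.karma >= MIN_KARMA
    return (old_enough and enough_karma) if require_both else (old_enough or enough_karma)

# Usage: a seven-month-old farmed account sails through; a brand-new human does not.
now = datetime(2024, 8, 1, tzinfo=timezone.utc)
farmed = Account(created=now - timedelta(days=210), karma=5_000)
newcomer = Account(created=now - timedelta(days=3), karma=15)
print(can_post(farmed, now, require_both=True), can_post(newcomer, now))
```

Nothing in the check asks where the karma came from, which is exactly the gap automated reposting exploits.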

While that may not seem like a big deal at first, the endgame is the same as with SEO: gaming the system. The current bots try to hijack human posts to farm karma. The future bots will be posting human-like responses generated by AI to farm karma. Hell, the reinforcement mechanism is already there, i.e. upvotes! Meanwhile, Google and OpenAI will be consuming Reddit content which itself will consist of more and more of their own AI output. The mythological Ouroboros was supposed to represent a cycle of death and rebirth, but the AI version is more akin to a dog eating its own shit.
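Researchers call this loop “model collapse,” and a crude toy version is easy to simulate. A minimal sketch under deliberately simple assumptions: the “model” is just a normal distribution fitted to its training data, and each new generation trains only on samples produced by the previous one. Nothing like a real LLM pipeline, but it shows the direction of travel:

```python
import random
import statistics

def collapse(generations: int, n: int, seed: int = 0) -> list[float]:
    """Toy model collapse: fit a normal distribution, sample from it, refit, repeat.

    Returns the fitted standard deviation ("spread") at each generation.
    """
    rng = random.Random(seed)
    # Generation 0 trains on "human" data drawn from a standard normal.
    data = [rng.gauss(0.0, 1.0) for _ in range(n)]
    spreads = []
    for _ in range(generations):
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data, mu)  # maximum-likelihood estimate
        spreads.append(sigma)
        # The next generation trains only on the current model's output.
        data = [rng.gauss(mu, sigma) for _ in range(n)]
    return spreads

spreads = collapse(generations=200, n=20)
print(f"generation 0 spread: {spreads[0]:.3f}")
print(f"generation 199 spread: {spreads[-1]:.6f}")
```

Run it and the fitted spread tends to shrink toward zero over the generations: the rare, tail-end stuff disappears first, and each copy is a little blander than the last. Mixing fresh human data back in reportedly slows this down, which is exactly why untouched human data gets more valuable over time.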

I suppose sometime in the future it’s possible for the tech-bro handlers or perhaps the AI itself to recognize (via reinforcement) that they need to roll back one iteration due to consuming too much self-content. Perhaps long-buried AOL chatroom logs and similar backups would become the new low-background steel, worth their weight in gold, er, Bitcoin.

Then again, it may soon be an open question of how much non-AI content even exists on the internet anymore, by volume. This article mentions experts expect 90% of the internet to be “synthetically generated” by 2026. As in, like, 2 years from now. Or maybe it’s already happened, aka Dead Internet.

[Fake Edit] So… I wrote almost exactly this same post a year ago. I guess the update is: it’s happening.

AI Presidential Debate

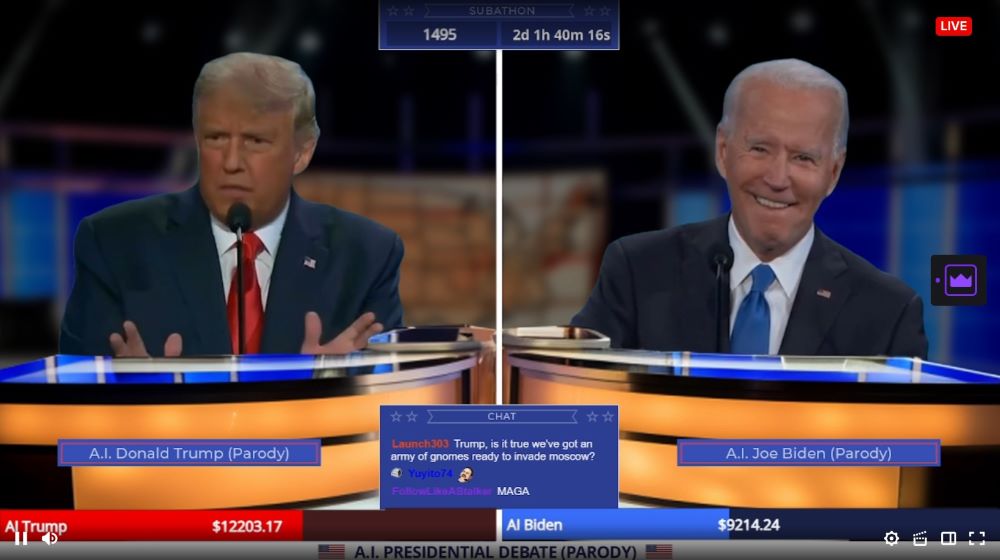

Oh boy, the future is now:

Welcome to TrumpOrBiden2024, the ultimate AI generated debate arena where AI Donald Trump and AI Joe Biden battle it out 24/7 over topics YOU suggest in the Twitch chat!

https://www.twitch.tv/trumporbiden2024

It’s best to just view a few minutes of it yourself, but this rank absurdity is an AI-driven “deep-fake” endless debate between Trump and Biden, full of NSFW profanity, and somehow splices in topics from Twitch chat. As the Kotaku article mentions:

The things the AI will actually argue about seem to have a dream logic to them. I heard Biden exclaim that Trump didn’t know anything about Pokémon, so viewers shouldn’t trust him. Trump later informed Biden that he couldn’t possibly handle genetically modified catgirls, unlike him. “Believe me, nobody knows more about hentai than me,” Trump declared. Both men are programmed to loosely follow the conversation threads the other sets, and will do all the mannerisms you’ve come to expect out of these debates, like seeing Biden react to a jab with a small chuckle. At one point during my watch, I saw the AI stop going at each other only to start tearing into people in the chat for having bad usernames and for not asking real questions.

It’s interesting how far we have come as a society and culture. At one point, deep-fakes were a major concern. Now, between ChatGPT, Midjourney/Stable Diffusion, and basic Instagram filters, there is a sort of democratization of AI taking place. Granted, most of these tools were given out for free to demonstrate the value of the groups who wish to eventually be bought up by multinationals, but what has been developed in such a short time is nevertheless amazing.

Of course, this all may well be the calm before the storm. Elon Musk’s lawyers tried to argue that a video of him claiming that Teslas could drive autonomously “right now” (in 2016) might have been a deep-fake. The judge was not amused and said Elon should then testify under oath that it wasn’t him in the video. The deep-fake claim was walked back quickly. But it is just a matter of time before someone gets arrested or sentenced based on AI-fabricated evidence, and when that comes out, things will get wild.

Or maybe it won’t. Impersonators have been around for thousands of years, people get thrown into jail on fabricated evidence all the time, and the threat of perjury is one of those in-your-face crimes that tend to keep people honest (or quiet) on the stand.

I suppose in the meantime, it’s memetime. Until the internet dies.

ChatGPT

Came across a Reddit post entitled “Professor catches student cheating with ChatGPT: ‘I feel abject terror’”. Among the comments was one saying “There is a person who needs to recalibrate their sense of terror.” The response to that was this:

Although I am bearish on the future of the internet in general with AI, the concerns above just sort of made me laugh.

When it comes to doctors and lawyers, what matters are results. Even if we assume ChatGPT somehow made someone pass the bar or get a medical license – and they further had no practical exam components/residency for some reason – the ultimate proof is the real world application. Does the lawyer win their cases? Do the patients have good health outcomes? It would certainly suck to be the first few clients that prove the professionals had no skills, but that can usually be avoided by sticking to those with a positive record to begin with.

And let’s not pretend that fresh graduates who did everything legit are always going to be good at their jobs. It’s like the old joke: what do you call the person who passed medical school with a C-? “Doctor.”

The other funny thing here is the implicit assumption that a given surgeon knowing which drug to administer is better than an AI chatbot. Sure, it’s a natural assumption to make. But surgeons, doctors, and everyone in-between are constantly lobbied (read: bribed) by drug companies to use their new products instead. How many thousands of professionals started over-prescribing OxyContin after attending “all expenses paid” Purdue-funded conferences? Do you know which conferences your doctor has attended recently? Do they even attend conferences? Maybe they already use AI, eh?

Having said that, I’m not super-optimistic about ChatGPT in general. A lot of these machine-learning algorithms get their base data from publicly-available sources. Once a few of the nonsense AI get loosed in a Dead Internet scenario, there is going to be a rather sudden Ouroboros situation where ChatGPT consumes anti-ChatGPT nonsense in an infinite loop. Maybe the programmers can whitelist a few select, trustworthy sources, but that limits the scope of what ChatGPT would be able to communicate. And even in the best case scenario, doesn’t that mean tight, private control over the only unsullied datasets?

Then again, if you are catering to just a few federated groups of people anyway, maybe that is all you need.

Dead Internet

There are two ways to destroy something: make it unusable, or reduce its utility to zero. The latter may be happening with the internet.

Let’s back up. I was browsing a Reddit Ask Me Anything (AMA) thread by a researcher who worked on creating “AI invisibility cloak” sweaters. The goal was to design “adversarial patterns” that tricked AI-based cameras into no longer recognizing that a person was, in fact, a person. During the AMA, though, they were asked what they thought about language-model AI like GPT-3. The reply was:

I have a few major concerns about large language models.

– Language models could be used to flood the web with social media content to promote fake news. For example, they could be used to generate millions of unique twitter or reddit responses from sockpuppet accounts to promote a conspiracy theory or manipulate an election. In this respect, I think language models are far more dangerous than image-based deep fakes.

This struck me as interesting, as I would have assumed deep-faked celebrity endorsements – or even straight-up criminal framing – would have been a bigger issue for society. But… I think they are right.

There is a conspiracy theory floating around for a number of years called “The Dead Internet Theory.” This Atlantic article explains in more detail, but the premise is that the internet “died” in 2016-2017 and almost all content since then has been generated by AI and propagated by bots. That is clearly absurd… mostly. First, I feel like articles written by AI today are pretty recognizable as being “off,” let alone what the quality would have been five years ago.

Second, in a moment of supreme irony, we’re already pretty inundated with vacuous articles written by human beings trying to trick algorithms, to the detriment of human readers. It’s called “Search Engine Optimization” and it’s everywhere. Ever wonder why cooking recipes on the internet have paragraphs of banal family history before giving you the steps? SEO. Are you annoyed when a piece of video game news that could have been summed up with two sentences takes three paragraphs to get to the point? SEO. Things have gotten so bad though that you pretty much have to engage in SEO defensively these days, lest you get buried on Page 27 of the search results.

And all of this is (presumably) before AI has gotten involved belting out 10,000 articles a second.

A lot has already been said about polarization in US politics and misinformation in general, but I do feel like the dilution of the internet’s utility has played a part in that. People have their own confirmation biases, yes, but it is also true that when there is so much nonsense everywhere, you retreat to the familiar. Can you trust this news outlet? Can you trust this expert citing that study? After a while, it simply becomes too much to research and you end up choosing 1-2 sources that you thereafter defer to. Bam. Polarization. Well, that and certain topics – such as whether you should force a 10-year-old girl to give birth – afford no ready compromises.

In any case, I do see there being a potential nightmare scenario of a Cyberpunk-esque warring AI duel between ones engaging in auto-SEO and others desperately trying to filter out the millions of posts/articles/tweets crafted to capture the attention of whatever human observers are left braving the madness between the pockets of “trusted” information. I would like to imagine saner heads would prevail before unleashing such AI, but… well… *gestures at everything in general.*

The Nature of Art

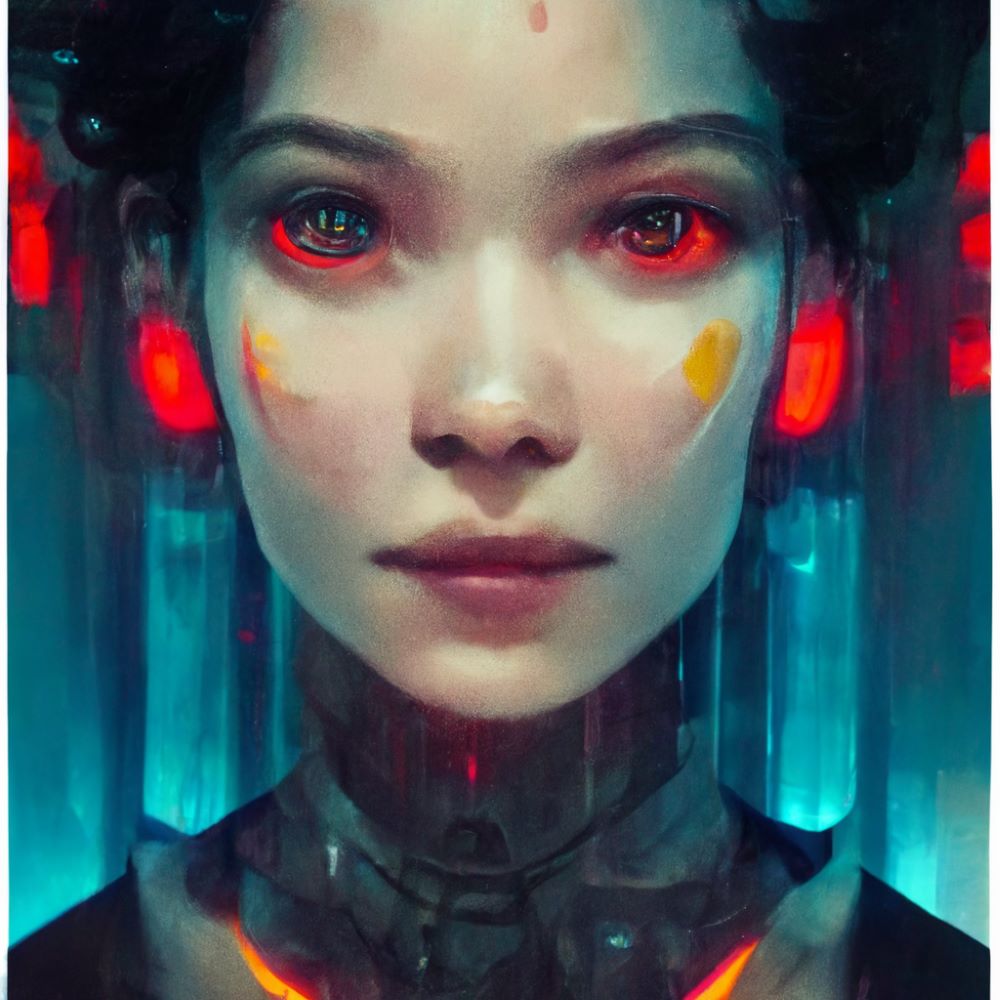

The following picture recently won 1st place at the Colorado State Fair:

Don’t know about you, but that looks extremely cool. I could totally see picking up a print of that on canvas and hanging it on my wall, if I were still in charge of decorating my house. Reminds me a bit of the splash screens for Guild Wars 2, which I have always enjoyed.

By the way, that picture was actually generated by an AI called Midjourney.

Obviously people are pissed. Part of that is based on the seeming subterfuge of someone submitting AI-generated artwork as their own. Part is based on the broader existential question that arises from computers beating humans at creative tasks (on top of Chess). Another part is probably because the dude who submitted the work sounds like a huge douchebag:

“How interesting is it to see how all these people on Twitter who are against AI generated art are the first ones to throw the human under the bus by discrediting the human element! Does this seem hypocritical to you guys?” […]

“I’m not stopping now” […] “This win has only emboldened my mission.”

It is true that there will probably just be an “AI-generated” category in the future and that will be that.

What fascinates me about the Reddit thread though, is how a lot of the comments are saying that the picture is “obviously” AI-generated, that it looks shitty, that it lacks meaning. For example:

It reminds me of an article I read about counterfeit art years ago. Most of the value of a piece of artwork is tied up in its history and continuity – a Monet is valuable because it came from Monet’s hand across the ages to your home. Which is understandable from a monetary perspective. But if you just like a Monet piece because of the way it makes you feel when looking at it, the authenticity does not matter. After all, most of us have probably only seen reproductions or JPEGs of his works anyway.

At a certain point though, I have to ask the deeper question… what is a “Monet” exactly?

Monet is rather famous, of course, and his style is distinctive. But aside from a few questions on my high school Art exam decades ago, I do not know anything about his life, his struggles, his aspirations. Did he die in poverty? Did he retire early in wealth? Obviously I can Google this shit at any time, but my point is this: I like The Water Lily Pond. The way it looks, the softness of the scene, the way it sort of pulls you into a season of growth you can practically smell. Who painted it and why couldn’t matter less to me, other than possibly wanting to know where I could find similar works of this quality.

This may just say more about me than it does art in general.

I have long held the position that I do not have favorite bands, I have favorite songs. I have favorite games, not studios or directors. I have favorite movies, not actors. Some of that is probably a defense mechanism – many an artist has turned out to be a raging asshole, game companies “betray” your “trust,” and so on. If part of the appeal of a given work is wrapped up in the creator(s), then a fall from grace and the resultant dissonance is a doubled injury. Kevin Spacey is not going to ruin my memories of American Beauty or The Usual Suspects, for example. I may have a jaundiced eye towards anything new, or perhaps towards House of Cards if I ever got around to watching that, as some things cannot be unlearned or fully compartmentalized (nor should they be).

So in a way, I for one welcome our new AI-art overlords.

Unlike the esteemed Snoo-4878, I do not presume that any given human artist actually adds emotion or intention into their art, or that its presence enhances the experience at all. How would you even know they were “adding emotion?” I once won a poetry contest back in high school with something I whipped up in 30 minutes, submitted solely for extra credit in English class. Seriously, my main goal was that the first letter of each line spelled out “Humans, who are we?” Granted, I am an exceptionally gifted writer. Humble, too. But from that experience I kind of learned that the things that should matter… don’t. Second place went to this brilliant emo chick who basically wrote poetry full-time. Her submission was clearly full of intention and personal emotion and it basically didn’t matter. Why would it? Art is largely about what the audience feels. And if those small-town librarians felt more emotions when hit by big words I chose because they sounded cool, that’s what matters.

Also, it’s low-key possible the emo chick annoyed the librarians on a daily basis, Vogon-style, and so they picked the first thing out of the pile that could conceivably have “won” instead of hers.

In any case, there are limits and reductionist absurdities to my pragmatism. I do not believe Candy Crush Saga is a better game than Xenogears, just because the former made billions of dollars and the latter did not. And if the value of something is solely based on how it makes you feel, then art should probably just be replaced by wires in our head (in the future) or microdoses of fentanyl (right now).

But I am also not going to pretend that typing “hubris of man monolith stars” and getting this:

…isn’t impressive as fuck. Not quite Monet, but it’s both disturbing and inspiring, simultaneously.

Which was precisely what I was going for when I made it.

Zombie Smarts

I have been playing some more 7 Days to Die (7DTD) now that the Alpha 17.1 patch came around. There have been a lot of tweaks to the progression mechanics and Perk system, including some level-gating on Iron/Steel tool recipes. The biggest change, however, was to zombie AI.

In short, zombies are now impossibly smart… and impossibly dumb.

It’s been long enough that I don’t even remember how zombies behaved in prior patches. What zombies do now, though, is behave with perfect tower-defense intelligence: they take the shortest path between them and you, with walls adding a virtual number of steps. Zombies are also perfectly prescient, knowing exactly which wall blocks have the lowest remaining health, and will attack that spot en masse to get to you. At the same time, zombies will avoid attacking walls, up to some unknown extent, if they can walk there instead.
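That “walls as extra steps” behavior is essentially a weighted shortest-path search. A minimal sketch, assuming a 2D grid and Dijkstra’s algorithm, where open ground costs 1 step and a wall costs 1 plus its remaining HP divided by a made-up damage-per-step constant. This is not 7DTD’s actual implementation, just an illustration of why such zombies converge on your weakest block:

```python
import heapq

# Hypothetical cost model: stepping onto open ground costs 1; breaking through a
# wall costs 1 plus its remaining HP divided by an assumed damage-per-step rate.
DAMAGE_PER_STEP = 50

def tile_cost(hp: int) -> float:
    return 1 + hp / DAMAGE_PER_STEP  # hp == 0 means open ground

def cheapest_path(grid: list[list[int]], start: tuple[int, int],
                  goal: tuple[int, int]) -> tuple[float, list[tuple[int, int]]]:
    """Dijkstra's algorithm over a grid of wall HP values.

    Every tile is passable at some cost, so a route to the goal always exists.
    """
    rows, cols = len(grid), len(grid[0])
    best = {start: 0.0}
    came_from = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, (r, c) = heapq.heappop(frontier)
        if (r, c) == goal:
            break
        if cost > best[(r, c)]:
            continue  # stale queue entry; a cheaper route was already found
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + tile_cost(grid[nr][nc])
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    came_from[(nr, nc)] = (r, c)
                    heapq.heappush(frontier, (new_cost, (nr, nc)))
    # Walk backwards from the goal to recover the route.
    path, node = [goal], goal
    while node != start:
        node = came_from[node]
        path.append(node)
    return best[goal], path[::-1]

# Usage: 0 = open ground, other numbers = wall HP. The player hides at (2, 2)
# inside a ring of 500 HP walls, except for one damaged 50 HP block at (2, 3).
base = [
    [0,   0,   0,   0, 0],
    [0, 500, 500, 500, 0],
    [0, 500,   0,  50, 0],
    [0, 500, 500, 500, 0],
    [0,   0,   0,   0, 0],
]
cost, path = cheapest_path(base, start=(0, 0), goal=(2, 2))
print(cost, path)
```

The route goes around the outside and straight through the damaged block at (2, 3) instead of any of the 500 HP walls. DAMAGE_PER_STEP stands in for the “to some extent” knob: lower it and walls get more expensive, so zombies will walk longer detours before they start chewing.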

The result? Cue the Benny Hill theme:

Essentially, the current 7DTD meta is to not create bases at all, but rather mazes that funnel zombies into kill zones and/or large drops that loop them around until they die of fall damage. The devs have added a “zombie tantrum” mechanic to try and get some damage on looped mazes – zombies will attack anything nearby when they fall, possibly weakening your support pillars – but that will be metagamed away with multiple platforms or deeper holes.

To be clear, the prior zombie meta was solved by simply building an underground bunker. At that time, zombies could not dig into the ground, and disregarded the Z axis entirely – it was possible to hang out in the middle of a bridge and often have a nice grouped pile of zombies directly below you to hit with a Molotov. I played the game enough to recognize which Point of Interest had a pre-built bunker located underneath it, and often sought it out immediately after spawning so I could all but ignore the titular 7th day horde attack.

That said, how smart should zombies be?

The only way to answer that question is to ask what the game you’re making is supposed to be about. When you add tower defense mechanics, you get a tower defense game. This discourages people from building nice little houses in the woods; they opt instead for mazes and obstacles and drops. It becomes a much more technical game, solvable with very specific configurations. Having dumber zombies frees up a lot more base designs, on top of possibly requiring a lot more attention to one’s base after an attack, as a single “dumb” zombie could be weakening a support in an unused corner.

My initial “solution” would be to mix and match, but I think that’s actually the worst of all possible worlds. Instead, I think zombies work best as environmental hazards. Bunkers might make you invulnerable to nightly attacks… but you have to leave sometime. Shouldn’t the punishment for hiding underground be the simple lack of information of what’s going on, combined with having to spend your morning hours slaying the zombie hordes milling about outside?

I guess we’ll see what the devs eventually decide. At present, there simply seems to be a maze-based arms race at the expense of any sort of satisfying nesting. If the 7DTD devs want to double-down, well… thank god for mods.

Dynamic vs Random

Keen has another post up lamenting the stagnant nature of modern MMO game design, while suggesting devs should instead be using ideas from games that came out 15+ years ago and nobody plays today. Uh… huh. This time the topic is mob AI and how things would be so much better if mobs behaved randomly, er, dynamically!

Another idea for improving mob AI was more along the lines of unpredictable elements influencing monster behavior. “A long list of random hidden stats would affect how mobs interact. Using the orc example again, one lone orc that spots three players may attack if his strength and bravery stats are high while intelligence is low. A different orc may gather friends.” I love the idea of having visible cues for these traits such as bigger orcs probably having more bravery, and scrawny orcs having more magical abilities or intelligence — intelligence would likely mean getting friends before charging in alone.

The big problem with dynamic behavior in games is that it’s often indistinguishable from random behavior from the player’s perspective. One of the examples from Keen’s post is about having orcs with “hidden stats” like Bravery vs Intelligence that govern whether they fight against multiple players or call for backup. Why bother? Unless players have a Scan spell or something, there is no difference between carefully-structured AI behavior and rolling a d20 to determine whether an orc runs away. Never mind how making the triggers visible (via Scan or visual cues) undermines all sense of dynamism. Big orc? Probably not running away. If the orc does run away, that’s just bad RNG.

There is no way past this paradox. If you know how they are going to react based on programming logic, the behavior is not unpredictable. If the behavior is unpredictable, even if it’s governed by hidden logic, it is indistinguishable from pure randomness. Besides, the two absolute worst mob behaviors in any game are A) when mobs run away at low health to chain into other mobs, and B) when there is no sense to their actions. Both of which are exactly what is being advocated for here.
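To make the paradox concrete, here is a minimal sketch of both orcs side by side. The stat names follow Keen’s example; the 1-10 ranges, the independence of the stats, and the thresholds are all hypothetical, picked so the numbers come out clean:

```python
from fractions import Fraction
from itertools import product

def hidden_stat_orc(strength: int, bravery: int, intelligence: int) -> bool:
    """Keen-style orc: True = charge alone, False = go get friends.

    Stats run 1-10; the thresholds are hypothetical.
    """
    return strength >= 6 and bravery >= 6 and intelligence <= 6

def d20_orc(roll: int) -> bool:
    """The lazy version: charge alone on an 18 or better."""
    return roll >= 18

# Exact charge rates, enumerating every possible orc and every possible roll.
STATS = range(1, 11)
hidden_rate = Fraction(sum(hidden_stat_orc(s, b, i)
                           for s, b, i in product(STATS, repeat=3)), 10 ** 3)
d20_rate = Fraction(sum(d20_orc(r) for r in range(1, 21)), 20)
print(hidden_rate, d20_rate)  # 3/20 3/20
```

Both orcs charge alone exactly 15% of the time. For any rule over hidden stats like this, there is some die roll that matches it, and the player only ever sees the outcome.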

I consider the topic of AI in games generally to be one of those subtle designer/player traps. It is trivially easy to create an opponent that a human player could never win against. Creating an opponent that taxes a player to their limit (and not beyond) is much more difficult, and the extent to which a player can be taxed varies by the player. From a defeated player’s perspective, there is no difference between an enemy they aren’t skilled enough to beat and an unbeatable enemy.

You have to ask yourself what you, as a hypothetical designer, are actually trying to accomplish. That answer should be “to have my intended audience have fun.” Unpredictable and tough mobs can be fun for someone somewhere, sure, but as Wildstar is demonstrating, perhaps that doesn’t actually include all that many people. Having to memorize 10+ minute raid dances is bad enough without tacking convoluted mob behavior outside of raids on top. Sometimes you just want to kill shit via a fun combat system.

Themepark MMO players enjoy simple, repetitive tasks – news at 11.

This AI Ain’t It

Sep 11

Posted by Azuriel

Wilhelm wrote a post called “The Folly of Believing in AI” and is otherwise predicting an eventual market crash based on the insane capital spent chasing that dragon. The thesis is simple: AI is expensive, so… who is going to pay for it? Well, expensive and garbage, which is the worst possible combination. And I pretty much agree with him entirely – when the music stops, there will be many a child left without a chair but holding a lot of bags, to mix metaphors.

The problematic angle I want to stress most, though, is the fundamental limitation of AI: it is dependent upon the data it intends to replace, and yet that data evolves all the time.

Duh, right? Just think about it a bit more though. The best use-case I have heard for AI has been from programmers stating that they can get code snippets from ChatGPT that either work out of the box, or otherwise get them 90% of the way there. Where did ChatGPT “learn” code though? From scraping GitHub and similar repositories for human-made code. Which sounds an awful lot like what a search engine could also do, but never mind. Even in the extremely optimistic scenario in which no programmer loses their job to future Prompt Engineers, eventually GitHub is going to start (or continue?) to accumulate AI-derived code. Which will be scraped and reconsumed into the dataset, increasing the error rate, thereby lowering the value that the AI had in the first place.

Alternatively, let’s suppose there isn’t an issue with recycled datasets and error rates. There will be less need for programmers, which means less opportunity for novel code and/or new languages, as they would have to compete with much cheaper, “solved” solutions. We then get locked into existing code at current levels of function unless some hobbyists stumble upon the next best thing.

The other use-cases for AI are bad in more obvious, albeit understandable ways. AI can write tailored cover letters for you, or if you’re feeling extra frisky, apply for hundreds of job postings a day on your behalf. Of course, HR departments around the world fired the first shots of that war when they started using algorithms to pre-screen applications, so this bit of turnabout feels like fair play. But what is the end result? AI talking to AI? No person can or will manually sort through 250 applications per job opening. Maybe the most “fair” solution will just be picking people randomly. Or consolidating all the power into recruitment agencies. Or, you know, just nepotism and networking per usual.

Then you get to the AI-written house listings, product descriptions, user reviews, or office emails. Just look at this recent Forbes article on how to use ChatGPT to save you time in an office scenario:

The article states email and meetings represent 15% and 23% of work time, respectively. Sounds accurate enough. And yet rather than address the glaring, systemic issue of unnecessary communication directly, we are to use AI to just… sort of brute force our way through it. Does it not occur to anyone that the emails you are getting AI to summarize are possibly created by AI prompts from the sender? Your supervisor is going to get AI to summarize the AI article you submitted, have AI create an agenda for a meeting they call you in for, AI is going to transcribe the meeting, which will then be emailed to their supervisor and summarized again by AI. You’ll probably still be in trouble, but no worries, just submit 5000 job applications over your lunch break.

In Cyberpunk 2077 lore, a virus infected and destroyed 78.2% of the internet. In the real world, 90% of the internet will be synthetically generated by 2026. How’s that for a bearish case for AI?

Now, I am not a total Luddite. There are a number of applications for which AI is very welcome. Detecting lung cancer from a blood test, rapidly sifting through thousands of CT scans looking for patterns, potentially using AI to create novel molecules and designer drugs while simulating their efficacy, and so on. Those are useful applications of technology to further science.

That’s not what is getting peddled on the street these days though. And maybe that is not even the point. There is a cynical part of me that questions why these programs were dropped on the public like a free hit from the local drug dealer. There is some money changing hands, sure, and it’s certainly been a boon for Nvidia and other companies selling shovels during a gold rush. But OpenAI is set to take a $5 billion loss this year alone, and they aren’t the only game in town. Why spend $700,000/day running ChatGPT like a loss leader, when there doesn’t appear to be anything profitable being led to?
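For scale, a quick back-of-the-envelope using only the two figures above, both of which are reported estimates rather than audited numbers:

```python
DAILY_RUN_COST = 700_000        # reported estimate, USD per day
PROJECTED_LOSS = 5_000_000_000  # projected loss this year, USD

annual_run_cost = DAILY_RUN_COST * 365
share_of_loss = annual_run_cost / PROJECTED_LOSS

print(f"${annual_run_cost:,} per year")              # $255,500,000 per year
print(f"{share_of_loss:.1%} of the projected loss")  # 5.1% of the projected loss
```

Taken at face value, merely keeping ChatGPT running accounts for only about a twentieth of that projected loss, so most of the red ink is coming from somewhere other than serving the public.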

[Fake Edit] Totally unrelated news from last week: Microsoft, Apple, and Nvidia are apparently bailing out OpenAI in another round of fundraising to keep them solvent… for another year, or whatever.

I think maybe the Dead Internet endgame is the point. The collateral damage is win-win for these AI companies. Either they succeed with the AGI moonshot – the holy grail of AI that would change the game, just like working fusion power – or fill the open internet with enough AI garbage to permanently prevent any future competition. What could a brand new AI company even train off of these days? Assuming “clean” output isn’t now locked down with licensing contracts, their new model would be facing off with ChatGPT v8.5 or whatever. The only reasonable avenue for future AI companies would be to license the existing datasets themselves into perpetuity. Rent-seeking at its finest.

I could be wrong. Perhaps all these LLMs will suddenly solve all our problems, and not just be tools of harassment and disinformation. Considering the big phone players are making deepfake software on phones standard this year, I suppose we’ll all find out pretty damn quick.

My prediction: mo’ AI, mo’ problems.

Posted in Commentary, Philosophy


Tags: AI, ChatGPT, Dead Internet Theory, Large Language Models, Luddite, What Could Possibly Go Wrong?